Abstract

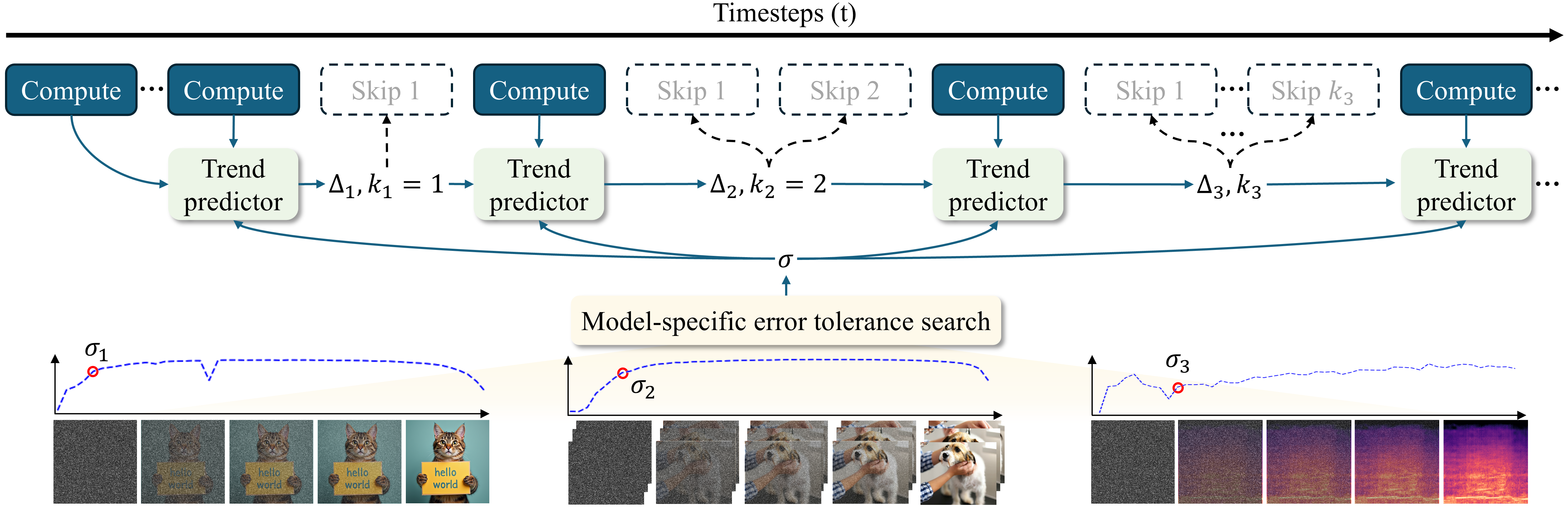

Diffusion models achieve remarkable generative quality but remain bottlenecked by costly iterative sampling. Recent training-free methods accelerate the diffusion process by reusing model outputs. However, these methods ignore denoising trends and lack error control tailored to each model's tolerance, so multi-step reuse causes trajectory deviations and exacerbates inconsistencies in the generated results. To address these issues, we introduce Error-aware Trend Consistency (ETC), a framework with two components: (1) a consistent trend predictor that exploits the smooth continuity of diffusion trajectories, projecting historical denoising patterns into stable future directions and progressively distributing them across multiple approximation steps to accelerate sampling without deviating from the trajectory; and (2) a model-specific error tolerance search mechanism that derives corrective thresholds by identifying the transition points from volatile semantic planning to stable quality refinement. Experiments show that ETC achieves a 2.65× acceleration over FLUX with a negligible degradation of consistency (-0.074 SSIM score).

Method

Overview of the proposed ETC. ETC leverages all historical model outputs to estimate future trends and dynamically adjusts the approximation frequency according to each model's error tolerance limit. The trend predictor computes weighted projections of cross-step changes in model outputs, then extends or contracts the approximation window depending on whether the deviations between projected trends and periodic model inferences remain within the model's corrective capacity. The model-specific error tolerance search quantifies the perceptual influence of deviation perturbations on generation quality and derives the critical transition points from volatile semantic planning to smooth quality refinement, which reflect the model's limits for error correction.
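To make the interaction between trend projection and window adaptation concrete, here is a minimal toy sketch. It is not the ETC implementation: the function names (`weighted_trend`, `sample_with_reuse`), the exponential `decay` weighting, the plain Euler update, the relative-norm deviation measure, and the hand-picked `tolerance` are all assumptions made for illustration. In ETC the tolerance would come from the model-specific search rather than being passed in by hand.

```python
import numpy as np

def weighted_trend(steps, outputs, decay=0.5):
    """Per-step change of the model output; recent history gets more weight (assumed scheme)."""
    outs = np.stack(outputs)
    gaps = np.diff(np.asarray(steps, dtype=float))
    rates = np.diff(outs, axis=0) / gaps.reshape(-1, *([1] * (outs.ndim - 1)))
    weights = decay ** np.arange(len(rates) - 1, -1, -1)  # newest rate has weight 1
    weights = weights / weights.sum()
    return np.tensordot(weights, rates, axes=1)

def sample_with_reuse(model, x0, num_steps, tolerance, max_window=4):
    """Toy sampler: real model calls are interleaved with trend-based approximations."""
    x = np.asarray(x0, dtype=float)
    dt = 1.0 / num_steps
    steps, outputs = [], []      # history of real model evaluations only
    window, calls, t = 0, 0, 0   # window = approximated steps after each real call
    while t < num_steps:
        real = model(x, t)
        calls += 1
        if len(outputs) >= 2:
            # Compare the real output with what the trend would have projected.
            projected = outputs[-1] + weighted_trend(steps, outputs) * (t - steps[-1])
            deviation = np.linalg.norm(real - projected) / (np.linalg.norm(real) + 1e-8)
            if deviation <= tolerance:
                window = min(window + 1, max_window)  # within tolerance: extend
            else:
                window = max(window - 1, 0)           # too much drift: contract
        steps.append(t)
        outputs.append(real)
        x = x + dt * real  # toy Euler update with the real output
        t += 1
        if window > 0:
            slope = weighted_trend(steps, outputs)
            for k in range(1, window + 1):
                if t >= num_steps:
                    break
                x = x + dt * (real + slope * k)  # approximated output, no model call
                t += 1
    return x, calls

def toy_model(x, t):
    """Hypothetical stand-in for a denoiser with a smooth output trajectory."""
    return -x

x_full, calls_full = sample_with_reuse(toy_model, [1.0, -2.0], 20, tolerance=-1.0)
x_fast, calls_fast = sample_with_reuse(toy_model, [1.0, -2.0], 20, tolerance=0.05)
print(calls_full, calls_fast)
```

A negative tolerance can never be met, so the first run evaluates the model at every step; the second run skips model calls whenever the projected trend stays within the tolerance.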

Results

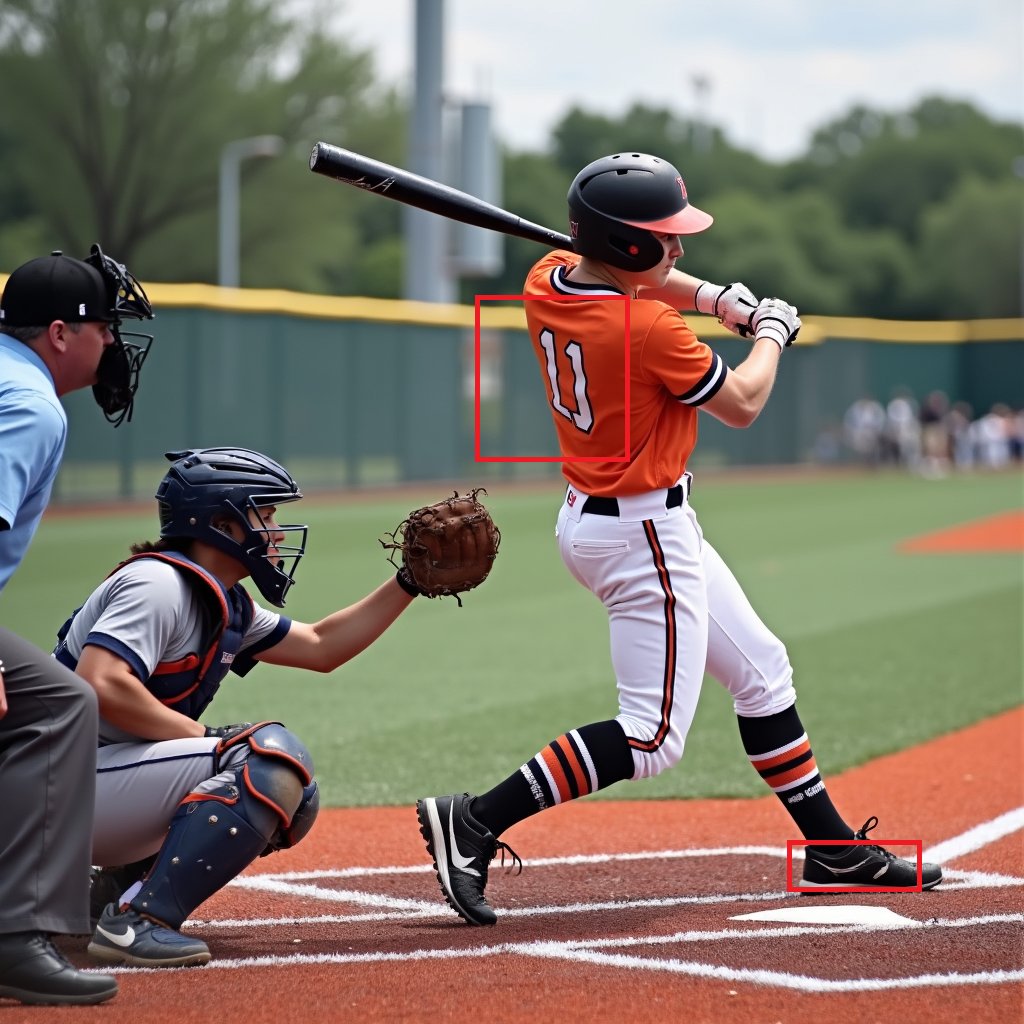

SDXL-Base

| Origin | AdaptiveDiff | SADA | ETC |
|---|---|---|---|
| 9.28s | 4.75s | 5.52s | 4.32s |
| 9.31s | 4.73s | 5.16s | 4.72s |
| 9.31s | 4.73s | 5.20s | 4.60s |
| 9.10s | 4.62s | 5.12s | 4.42s |
| 9.10s | 4.58s | 4.94s | 4.43s |
| 9.21s | 5.11s | 4.90s | 4.46s |
| 9.05s | 4.82s | 5.18s | 4.52s |
| 9.22s | 4.83s | 5.41s | 4.61s |
| 9.10s | 4.76s | 5.15s | 4.55s |
| 9.05s | 4.85s | 4.95s | 4.53s |

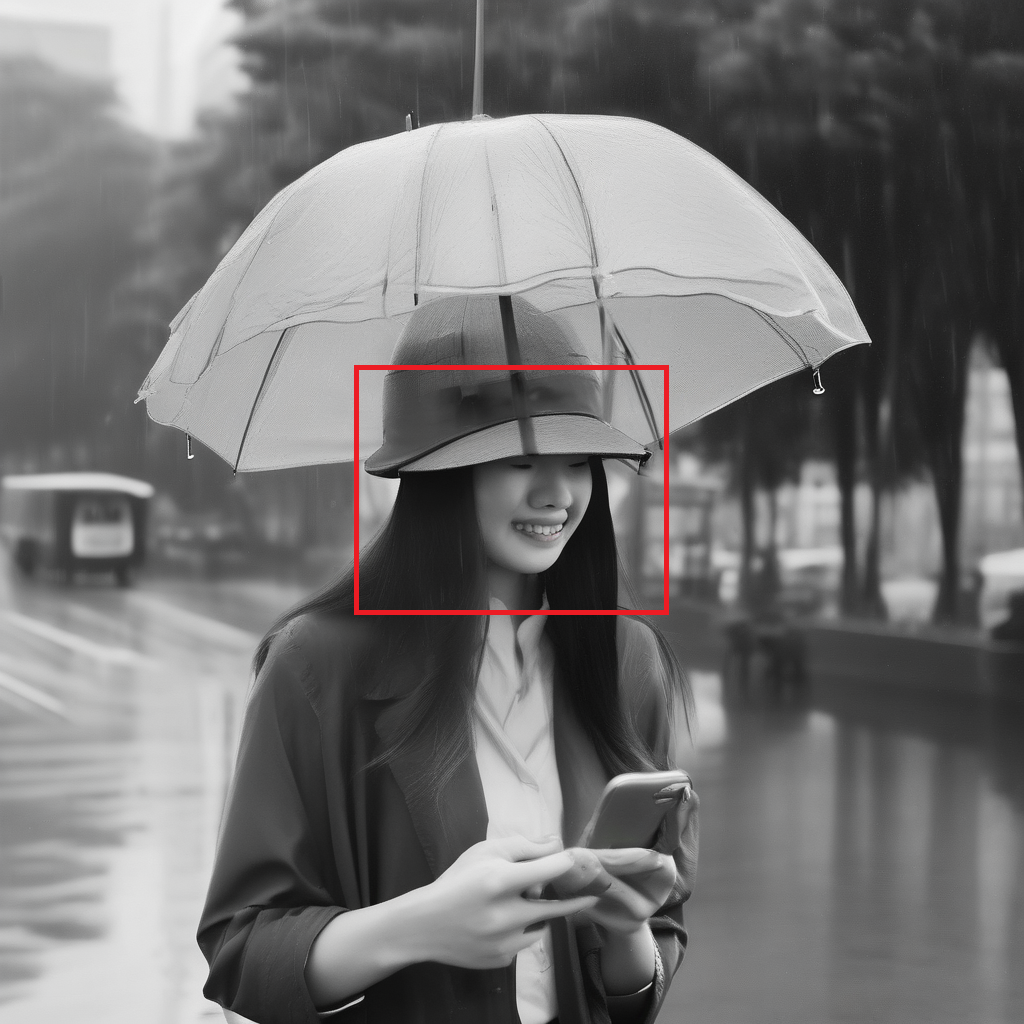

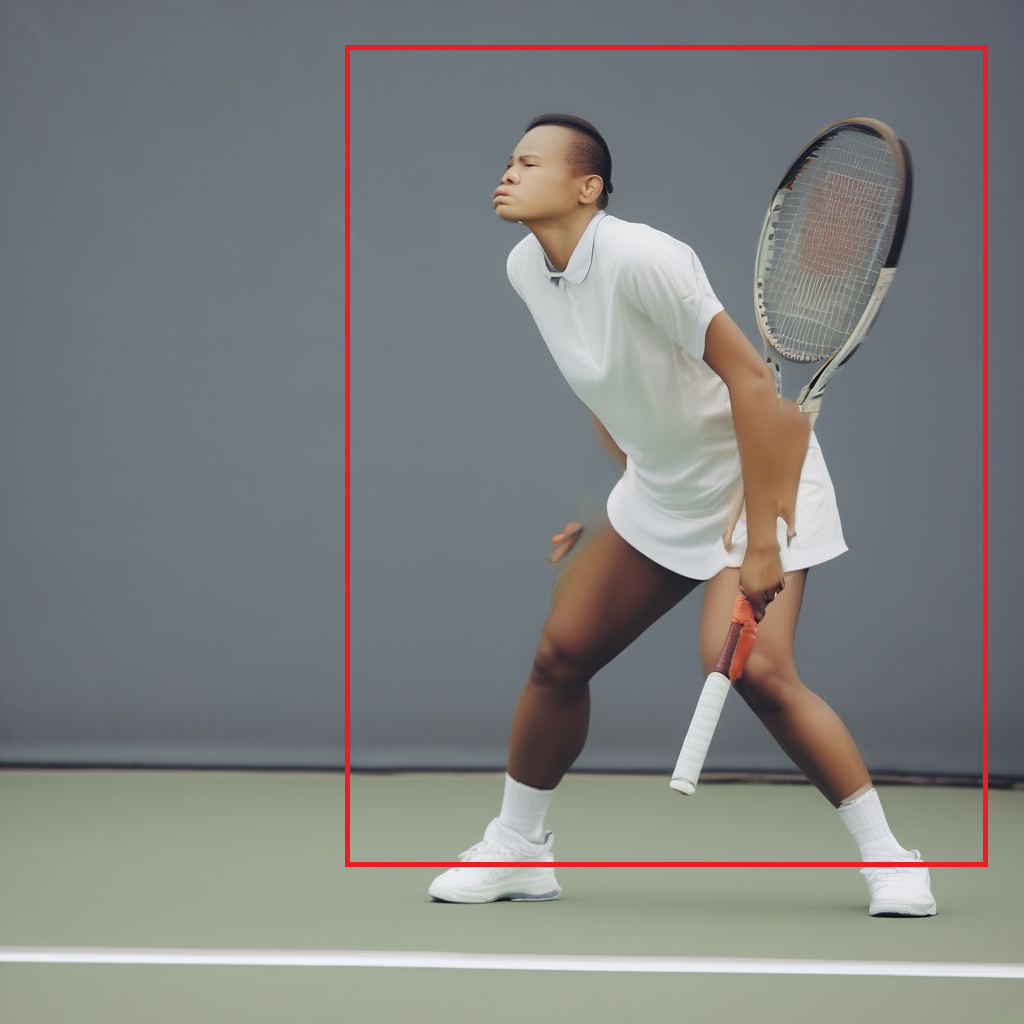

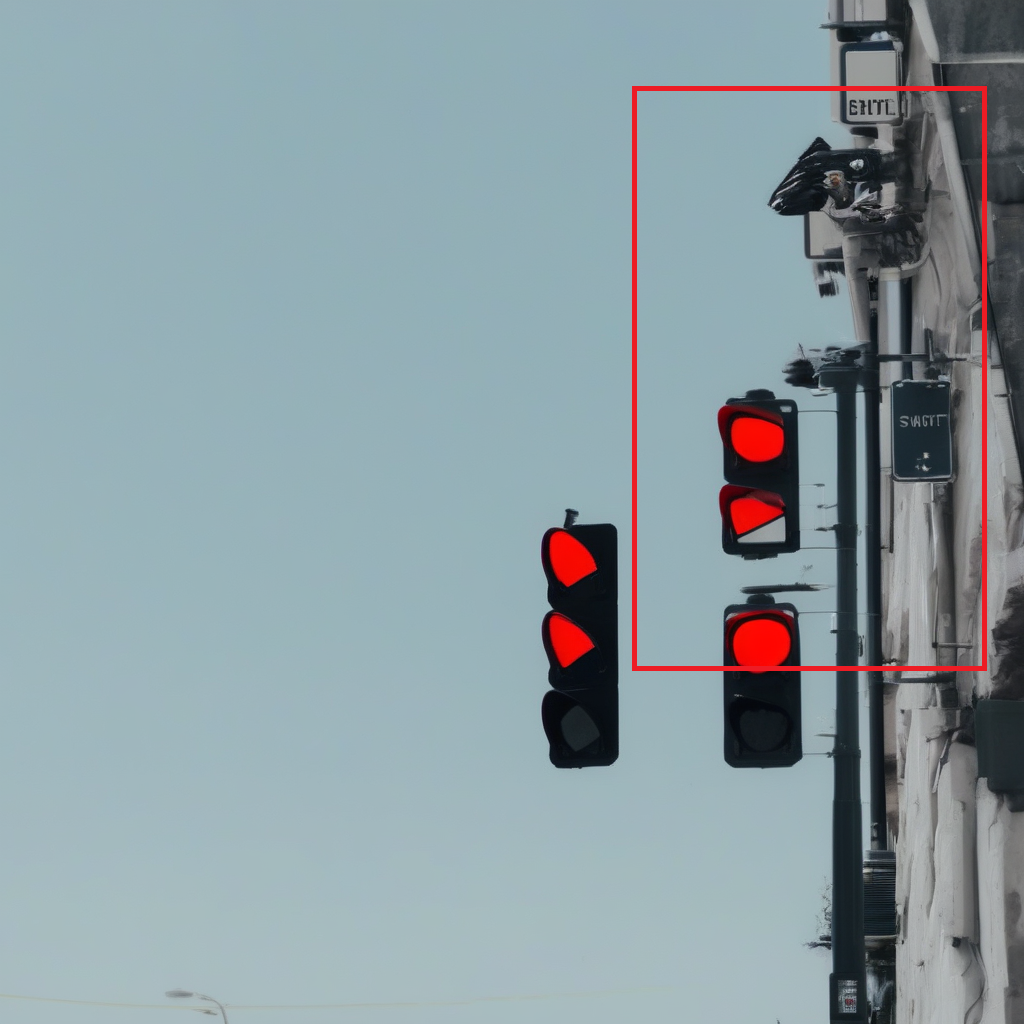

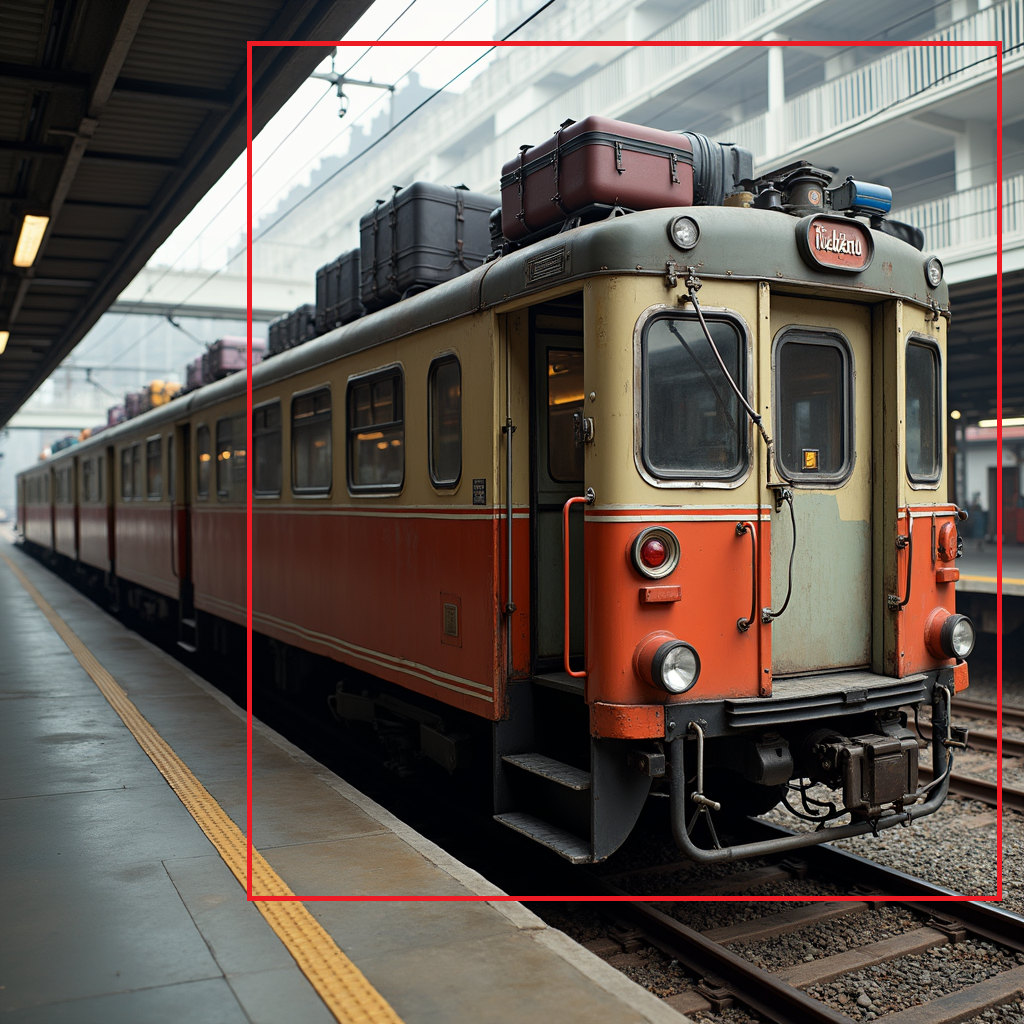

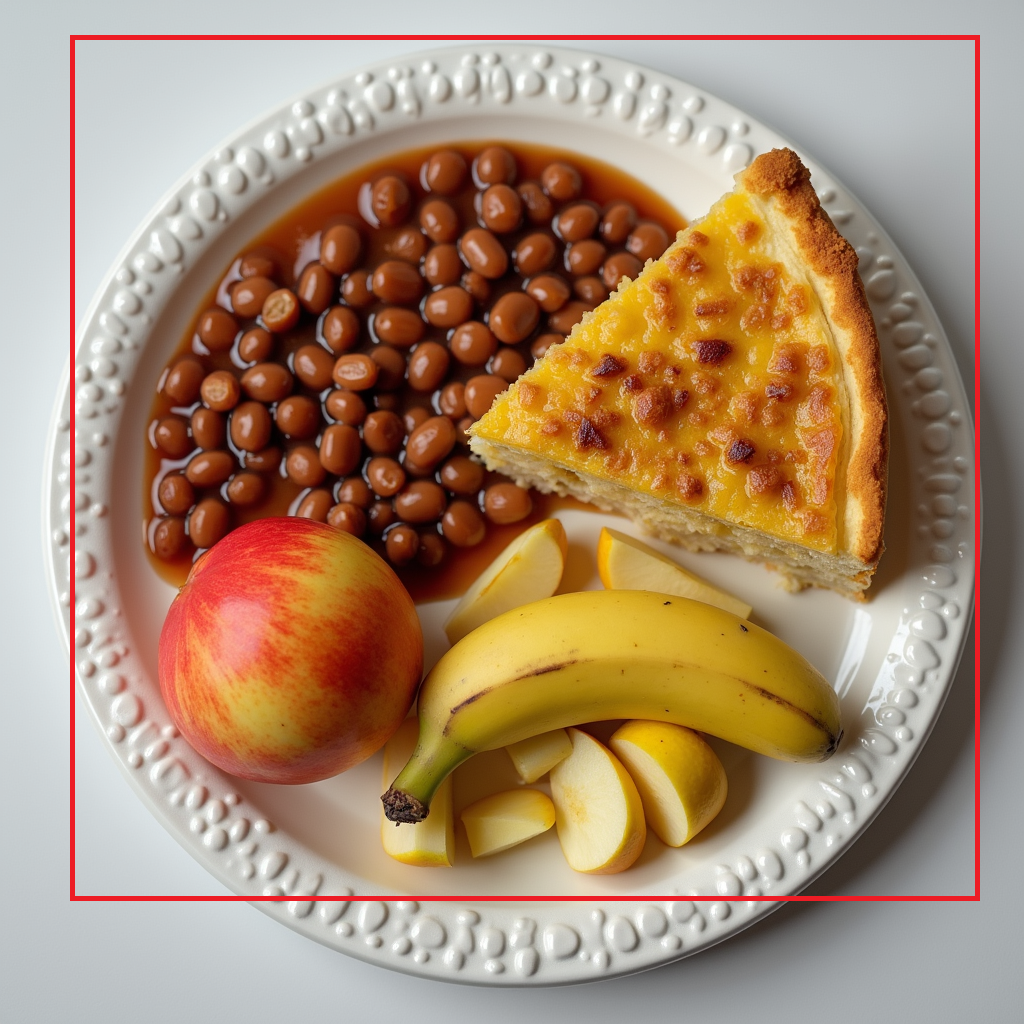

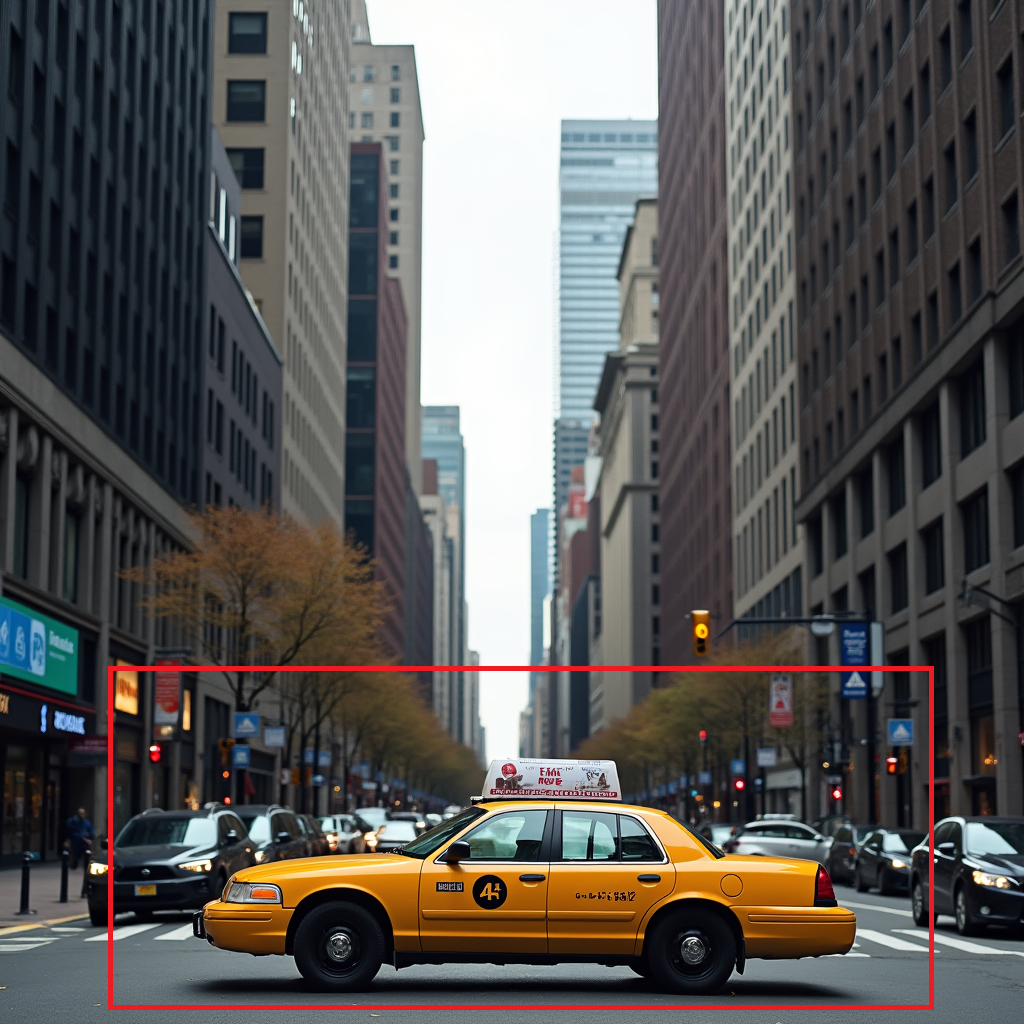

FLUX

|

|

|

|

|---|---|---|---|

Origin (29.07s) |

TeaCache (11.91s) |

SADA (12.95s) |

ETC (11.52s) |

|

|

|

|

|---|---|---|---|

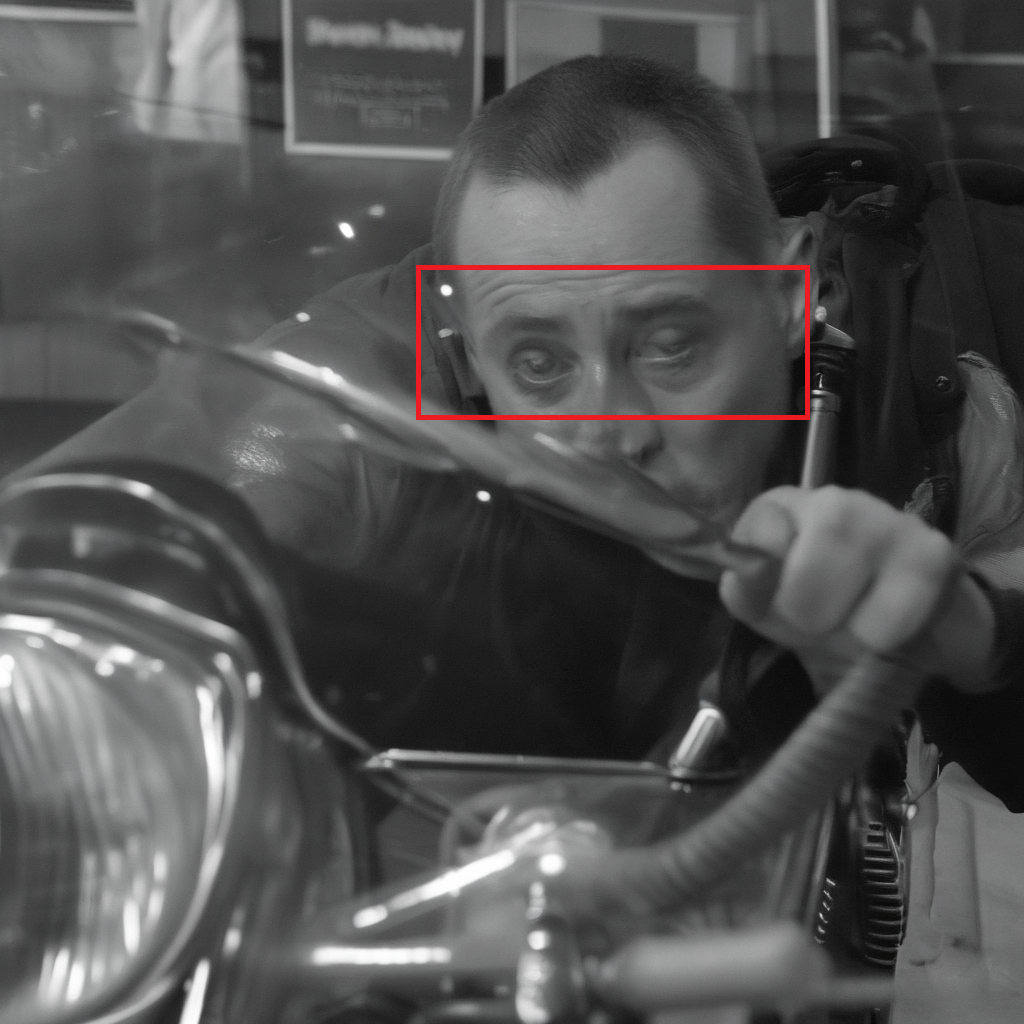

Origin (29.15s) |

TeaCache (11.84s) |

SADA (14.01s) |

ETC (10.66s) |

|

|

|

|

|---|---|---|---|

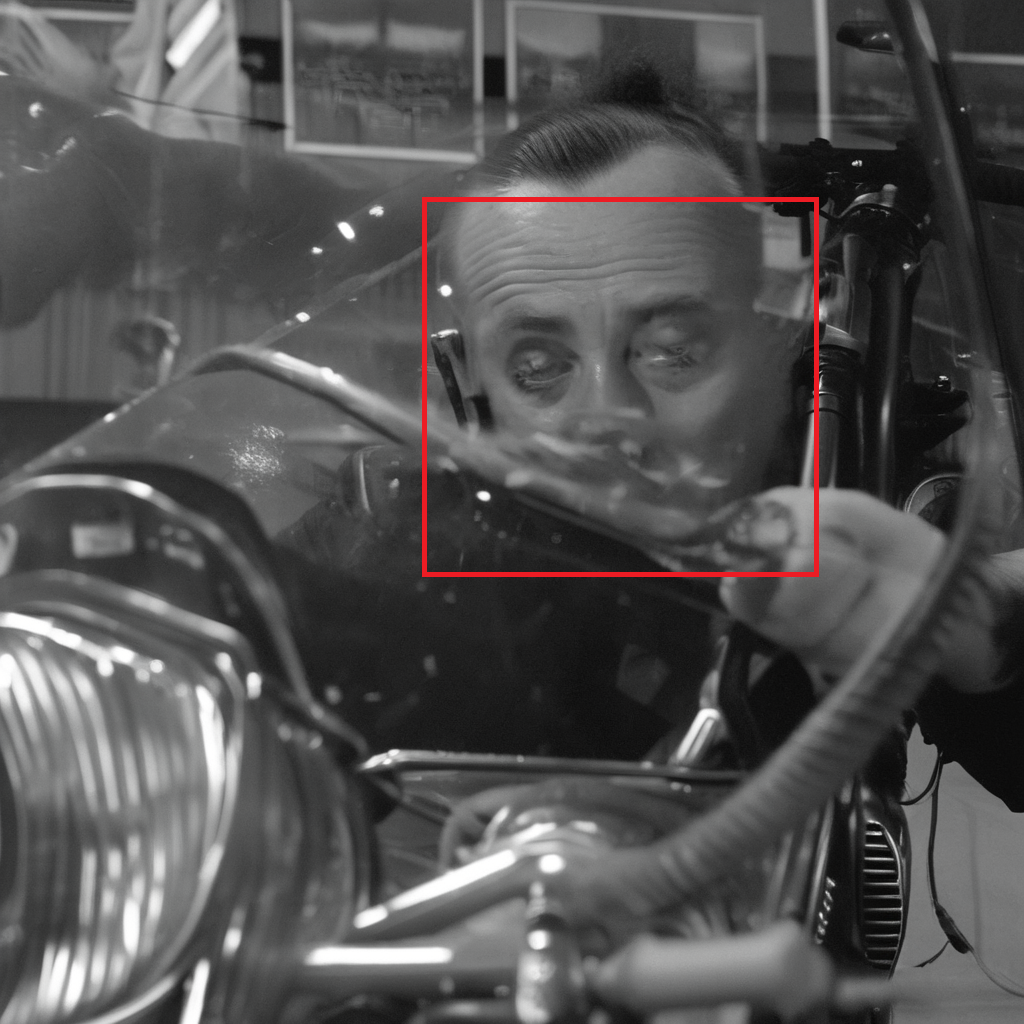

Origin (28.95s) |

TeaCache (11.84s) |

SADA (15.80s) |

ETC (10.92s) |

|

|

|

|

|---|---|---|---|

Origin (28.72s) |

TeaCache (11.73s) |

SADA (14.65s) |

ETC (11.01s) |

|

|

|

|

|---|---|---|---|

Origin (29.25s) |

TeaCache (11.80s) |

SADA (14.00s) |

ETC (11.13s) |

|

|

|

|

|---|---|---|---|

Origin (28.97s) |

TeaCache (12.07s) |

SADA (14.58s) |

ETC (10.84s) |

|

|

|

|

|---|---|---|---|

Origin (28.97s) |

TeaCache (11.96s) |

SADA (14.92s) |

ETC (10.66s) |

|

|

|

|

|---|---|---|---|

Origin (28.95s) |

TeaCache (11.92s) |

SADA (14.61s) |

ETC (10.82s) |

|

|

|

|

|---|---|---|---|

Origin (28.69s) |

TeaCache (11.87s) |

SADA (12.84s) |

ETC (10.94s) |

|

|

|

|

|---|---|---|---|

Origin (29.04s) |

TeaCache (11.38s) |

SADA (14.07s) |

ETC (10.86s) |
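As a quick sanity check, the ten FLUX latencies listed above can be averaged to see how they relate to the 2.65× figure quoted in the abstract. The snippet below only uses the Origin and ETC timings shown here; whether the reported number was computed over these same samples, or as a ratio of mean latencies, is an assumption.

```python
# Per-sample FLUX latencies in seconds, copied from the results above.
origin = [29.07, 29.15, 28.95, 28.72, 29.25, 28.97, 28.97, 28.95, 28.69, 29.04]
etc = [11.52, 10.66, 10.92, 11.01, 11.13, 10.84, 10.66, 10.82, 10.94, 10.86]

mean_origin = sum(origin) / len(origin)
mean_etc = sum(etc) / len(etc)
speedup = mean_origin / mean_etc  # ratio of mean latencies

print(f"Origin mean: {mean_origin:.2f}s, ETC mean: {mean_etc:.2f}s, speedup: {speedup:.2f}x")
```

On these ten samples the ratio comes out at roughly 2.65×, consistent with the abstract.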

OpenSora-1.2

| Origin | TeaCache | MagCache | ETC |
|---|---|---|---|
| 45.38s | 22.03s | 21.25s | 21.10s |
| 45.12s | 21.95s | 21.09s | 20.87s |
| 45.30s | 22.01s | 21.52s | 20.98s |
| 45.33s | 22.17s | 21.53s (SADA) | 21.09s |
| 45.10s | 22.38s | 21.97s | 21.23s |
| 45.28s | 22.15s | 21.58s | 21.01s |
| 45.30s | 21.75s | 20.89s | 20.13s |
| 45.19s | 21.87s | 21.28s | 20.56s |
| 45.31s | 21.88s | 21.47s | 19.53s |
| 45.22s | 21.80s | 21.54s | 21.08s |

Wan-2.1

| Origin | TeaCache | MagCache | ETC |
|---|---|---|---|
| 971.28s | 518.21s | 400.97s | 406.5s |
| 970.37s | 494.51s | 406.97s | 390.22s |
| 971.08s | 508.93s | 411.55s | 402.59s |
| 971.14s | 489.10s | 407.11s (SADA) | 382.05s |
| 971.22s | 488.86s | 407.68s | 380.09s |
| 969.69s | 489.02s | 395.21s | 364.87s |
| 970.63s | 488.8s | 470.77s | 341.27s |
| 970.94s | 515.53s | 407.07s | 399.51s |
| 969.69s | 510.19s | 407.66s | 381.84s |
| 970.82s | 488.46s | 407.73s | 383.54s |